Content tagged Reddit

An Escher Videogame and other nerdities

posted on 2008-01-22 17:22:47

One of the games I'm most excited about coming out this year is called echochrome. It's coming out for PlayStation 3 and the PSP. No US release date has been announced, but it will land in Japan on March 19th. While I normally don't bother writing about games, this one's special. It's one of the most novel concepts for a game I've seen in years. In short: you rotate a scene featuring an Impossible Object so that an automated walking man can navigate it. It's a perspective-based puzzle game. Here, look:

Also, everybody is writing about CS Education lately, which is awesome considering how much I've been thinking about it. Just look at all this mess:

The Enfranchised Mind article (which may be the best of the bunch) and associated reddit comments

Raganwald's No Disrespect article and associated reddit comments

and Mark Guzdial's take and associated reddit comments

It may sound like a cop-out, but I think Abelson and Sussman had this right all along. We're so hopelessly early in the existence of Computer Science as a discipline that we don't have a clue what it really is yet. And when you don't know what something is, it's pretty hard to know how to present it. Or steer its course. That's all for now.

Finally, a paper about a dependently typed version of Scheme got posted on LtU (Lambda the Ultimate). Very intriguing.

Software in the Real World

posted on 2008-01-10 19:28:55

I'm a bit perturbed at the moment and I'm having a hard time figuring out why, but it seems to happen to me after reading Spolsky articles and their associated reddit comments. At any rate, that's the last time I remember having this same feeling, the feeling I might call "Computer Science scares the shit out of me". Either that or "the real world scares the shit out of me".

But on to the Spolsky article. I read Spolsky's article "The Perils of Java Schools" and the comments from when it was posted on reddit. The article is about what it sounds like. Joel thinks that schools have dumbed down their CS programs by teaching Java instead of a functional language like LISP or Scheme or a low-level language like C. The commenters then get into arguments about Joel being stupid, what the ONE TRUE WAY to teach Computer Science and/or programming is, why one set of skills or another is valuable in industry, how industry's goals differ from academia's, and anything else they see fit to mention.

The arguments in the comments on these articles are often, in fact, mere miscommunication. One side advocates that a good (or great) programmer is found by seeking out those who are technically adept with things like tail-call recursion and functional programming or low-level bit-hackery. The other side advocates finding those who have good design principles and an understanding of architecture and best practices.

The missed point seems to be that the first side (to my thinking) presumes that their conditions ipso facto produce the candidate argued for by the second side. That is, the first side thinks that if someone understands tail-call recursive functions and pointers, then they must have some sense of design to go with their knowledge of abstractions, and that this, consequently, makes them good engineers. The second side misses this and argues that design skill is more important than technical ability. Both are large components of a good programmer, though. I do not think Spolsky would advocate hiring programmers who had technical ability but little design skill, or design skill and best practices but little technical ability.

After reading these articles, however, I have to step back and remember that we're talking about Computer Science or programming, both of which ultimately aim at the creation of software or, in Sussman's words, a description of a process. That description (software) is supposed to automate work, to create value. And THAT is the scary part.

Computer Science scares me a little because I wonder if I have the necessary chops (and desire) to become a good programmer. It's also scary because it will take me a while to even figure out the answer to that question, probably longer than I'd like. Real life, on the other hand, scares me a lot more, for an entirely different reason that I'll explain by way of confession.

I confess that I have an intense urge to read reddit and I find it very hard to resist. It borders on compulsion. The reason is this: I think I'm lazy. In fact, it's even more than that. I think I'm not going to make it out there. You know, in the real world. There are a few reasons for this. One, it's painted as scary and brutal by a lot of people. Two, and this is the bit about me being lazy, I think the real world is bullshit. Or at least mostly bullshit. It's people trying to find ways to stay busy so they can make money so they can eat and do things they actually care about. This next bit is important so I want to state it carefully:

It's not that I think there aren't people out there getting things done that actually need doing. It's that I think 90% of human labor is about maintaining the status quo, that maintaining the status quo is a huge waste of time if not for the fact that you'd starve otherwise, and that the last little bit that actually creates new value and advances the state of things seems like accident or luck as often as the product of hard work. Moreover, there's no guarantee, no matter who you are, that you won't just get unlucky and get screwed. THAT is what's scary.

It's scary because I don't want to hate my job and just try to do what's necessary to make it. It's scary because I'd like to be in that little 10% and there is no guaranteed way to get there. And it's scary because the very fact of it is implicitly anti-hope or anti-progress. "90% of the world is about maintaining the world. Good luck."

I read reddit not because I want to avoid my other duties but because I wildly want to believe that somewhere on there I will find the guidance I need to not be a 90% human being. I want to be good at something, produce value, not fear starvation or unemployment, and love my craft. So far, I believe programming to be my best bet. Hopefully, this year off from college will bear that out one way or another.

Thoughts Scattered, Smothered, Covered, etc.

posted on 2007-09-10 13:38:49

I have gone about dedicating myself to invisible empires. I rise and the day brings visions of struts jutting out of the soil to sustain immeasurable edifices to man.

I am having fun. I can say that much.

To Do list:

Cookies.

Essay on Radical Visions.

Lots of Discrete Mathematics to prepare for Wed test.

Java Programming and C Programming.

Figure out what days are with whom this weekend. Note: skate will be out.

Gym and Laundry.

Read one of the following good things: The Wealth of Networks, Programming the Universe, Open Sources (1 or 2), Infotopia. Also Milosz and Neruda.

Morning Reading:

http://crookedtimber.org/category/benkler-seminar/

http://crookedtimber.org/2004/10/07/long-after-the-new-economy/#more-2316

http://blogs.msdn.com/devdev/archive/2007/09/07/p-complete-and-the-limits-of-parallelization.aspx

http://www.thinkingparallel.com/2007/09/06/how-to-split-a-problem-into-tasks/

http://rc3.org/2007/09/my-old-friend-p.php

http://khigia.wordpress.com/2007/09/07/different-dbms/

http://blog.snaplogic.org/?p=94

http://o20db.com/db/setup/

http://www.joelonsoftware.com/articles/LordPalmerston.html?repost_reason=current_meme

http://www.computerworld.com/action/article.do?command=printArticleBasic&articleId=9034619

http://smoothspan.wordpress.com/2007/08/21/are-you-red-shifted-aka-do-you-use-utility-computing-web-20-and-every-other-cool-thing/

Labor Day Leisure

posted on 2007-09-04 00:52:48

I have to say this Labor Day has been fairly leisurely. I probably should have done more homework, and there are definitely some things I need to get written and posted on the blog, but I'll get to that as soon as I can. I've been feeling a bit under the weather for the last 24 to 48 hours. I think I have a bit of a throat thing, but hopefully it will pass in another day or two. Here is tonight's fantastic reading:

Peer Production Models

http://www.sauria.com/blog/2007/08/25/scalability-concurrency/

http://www.russellbeattie.com/blog/java-needs-an-overhaul

http://intertwingly.net/blog/2007/08/25/Lean-Languages-and-Libraries

http://bitworking.org/news/158/ETech-07-Summary-Part-2-MegaData

http://redmonk.com/sogrady/2007/08/26/links-for-2007-08-27/

I'll try to find my words for all this soon...

The State of State

posted on 2007-08-29 13:20:44

So, I've been having and alluding to discussions with Tim Sweeney of late. I sent him an e-mail a little over two weeks back and heard back from him about a week ago. It's taken me a while to digest his reply and to ask his permission to post it here, but he has kindly granted that permission. So, without further ado, the transcript:

Me:

Tim,

My name is Brit Butler. I'm a college student in Atlanta, GA and an admirer of your work. I was very taken with your POPL talk on The Concurrency Problem but curious as to why you mentioned both the message passing model and referentially transparent functions but then went on to mostly talk about the latter with Haskell. I'm certain that you've used and read about Erlang and other message-passing systems and was wondering if you could explain your position on them to me, vis-a-vis Transactional Memory or some other method. I'm assuming you wouldn't be in support of STM because it's ultimately still about sharing state. Thanks so much for your time.

Regards,

Brit Butler

http://www.redlinernotes.com/

Tim:

Hi,

Lots of applications and reasonable programming styles rely on large amounts of mutable state. A game is a great example – there are 1000’s of objects moving around and interacting in the world, changing each frame at 60 frames per second. We need to be able to scale code involving that kind of mutable state to threads, without introducing significant new programming burdens. Transactional memory is the least invasive solution, as it allows writing pieces of code which are guaranteed to execute atomically (thus being guaranteed of not seeing inconsistencies in state), using a fairly traditional style, and it scales well to lots of threads in the case where transactions seldom overlap and touch the same state.

Message-passing concurrency isn’t a very good paradigm for problems like this, because we often need to update a group of objects simultaneously, preserving atomicity. For example, within one transaction, the player object might decide to shoot, issue a command to his weapon, check an ammunition object, remove ammunition from it, and spawn a new bullet that’s now flying through the world. And the sets of objects which may need to atomically interact isn’t statically known – any objects that come into contact, or communicate, or are visible to each other, may potentially interact.

When implementing that kind of code on top of a message-passing concurrency layer, you tend to get bogged down writing numerous message interchanges which really just turn out to be ad-hoc transaction protocols. That’s quite error-prone. Better to just use transactions in that case.

This argument for transactional memory is limited in scope:

For algorithms which can be made free of side effects, pure functional programming (or “nearly pure functional programming” as in Haskell+ST) is cleaner and allows more automatic scaling to lots of threads, without committing to a particular granularity as with message-passing.

For algorithms that need to scale to multiple PCs, run across the Internet, etc, transactions seem unlikely to be practical. For in-memory transactions on a single CPU, the overhead of transactions can be brought down to <2X in reasonable cases. Across the network, where latencies are 1,000,000 times higher, message passing seem like the only plausible approach. This constrains algorithms a lot more than transactions, but, hey, those kinds of latencies are way too high to “abstract away”.

The existing Unreal engine actually uses a network-based message passing concurrency model for coordinating objects between clients and servers in multiplayer gameplay. It even has a nifty data-replication model on top, to keep objects in sync on both sides, with distributed control over their actions. It’s quite cool, but it’s inherently tricky and would add an awful lot of complexity if we used that for coordinating multiple threads on a single PC.

-Tim
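To make Tim's shooting example concrete, here is a toy Python sketch of an optimistic transaction, written under heavy assumptions. The names `World`, `atomically`, and `shoot` are hypothetical. The sketch uses a single global version counter rather than the per-location read/write tracking a real transactional memory performs, and the lock only guards taking the snapshot and committing. What it does show is the run, validate, then commit-or-retry cycle, and the all-or-nothing update: removing a round and spawning a bullet are published together.

```python
import threading


class World:
    """Hypothetical game state: a player's ammo count and the bullets in flight."""

    def __init__(self, ammo):
        self.ammo = ammo
        self.bullets = []
        self.version = 0                 # bumped on every successful commit
        self.lock = threading.Lock()     # guards snapshot reads and commits only


def atomically(world, txn):
    """Optimistically run txn and retry if another commit got in first.

    txn gets a snapshot (ammo, bullets) and returns a new (ammo, bullets) pair,
    or None to abort. The pair is published in a single step, so no thread can
    observe ammo removed without the matching bullet.
    """
    while True:
        with world.lock:
            start = world.version
            ammo, bullets = world.ammo, list(world.bullets)
        result = txn(ammo, bullets)      # the transaction body runs unlocked
        with world.lock:
            if world.version != start:   # conflict: state changed under us
                continue                 # discard the result and retry
            if result is not None:
                world.ammo, world.bullets = result
                world.version += 1
            return result is not None


def shoot(ammo, bullets):
    """Check the ammunition, remove one round, and spawn a bullet together."""
    if ammo <= 0:
        return None                      # out of ammo: abort, nothing changes
    return ammo - 1, bullets + ["bullet-%d" % len(bullets)]


world = World(ammo=10)
fired = []


def trigger_happy():
    for _ in range(3):
        fired.append(atomically(world, shoot))


threads = [threading.Thread(target=trigger_happy) for _ in range(8)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(world.ammo, len(world.bullets), fired.count(True))  # 0 10 10
```

With 10 rounds and 8 threads each pulling the trigger three times, exactly 10 shots commit. The other attempts either retry after a conflict or abort because the snapshot they saw had no ammunition left. CPython threads will not make this run faster. The point is only the atomicity Tim describes.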

So, there you have it. More on all this later. I've got to go play around with Erlang a bit more. I'm hoping Andre Pang and Patrick Logan will help me dig into this a bit deeper in days to come. Who else wants in on the conversation? Comment below.
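Tim's point about message passing turning into "ad-hoc transaction protocols" is easier to see with a small example. Here is a hypothetical Python sketch that uses threads with `queue.Queue` inboxes as rough stand-ins for Erlang processes; it does not model Erlang's actual semantics. Ammunition and the world's bullets live in separate actors, and a hypothetical `MAX_BULLETS` cap makes spawning fail sometimes. Firing therefore turns into a hand-rolled protocol: reserve a round, try to spawn, and send a refund if spawning fails.

```python
import queue
import threading


def actor(handle):
    """Start a thread that owns some state and handles one message at a time."""
    inbox = queue.Queue()

    def loop():
        while True:
            msg = inbox.get()
            if msg is None:              # shutdown sentinel
                return
            handle(msg)

    thread = threading.Thread(target=loop)
    thread.start()
    return inbox, thread


def ask(inbox, *request):
    """Send a request and block until the actor replies: one round trip."""
    reply = queue.Queue(maxsize=1)
    inbox.put((request, reply))
    return reply.get()


ammo = {"rounds": 3}                     # owned by the ammo actor only
bullets = []                             # owned by the world actor only
MAX_BULLETS = 2                          # hypothetical cap so spawning can fail


def handle_ammo(msg):
    request, reply = msg
    if request[0] == "reserve":
        ok = ammo["rounds"] > 0
        if ok:
            ammo["rounds"] -= 1
        reply.put(ok)
    elif request[0] == "refund":
        ammo["rounds"] += 1
        reply.put(True)


def handle_world(msg):
    request, reply = msg
    ok = request[0] == "spawn" and len(bullets) < MAX_BULLETS
    if ok:
        bullets.append(request[1])
    reply.put(ok)


def shoot(ammo_inbox, world_inbox):
    """Hand-rolled protocol: reserve a round, try to spawn, refund on failure.

    Between the messages another actor could observe the round gone with no
    bullet in the world yet; nothing here makes the two steps atomic.
    """
    if not ask(ammo_inbox, "reserve"):
        return False
    if ask(world_inbox, "spawn", "bullet"):
        return True
    ask(ammo_inbox, "refund")            # compensating message
    return False


ammo_inbox, ammo_thread = actor(handle_ammo)
world_inbox, world_thread = actor(handle_world)
results = [shoot(ammo_inbox, world_inbox) for _ in range(3)]
for inbox in (ammo_inbox, world_inbox):
    inbox.put(None)
ammo_thread.join()
world_thread.join()
print(results, ammo["rounds"], bullets)  # [True, True, False] 1 ['bullet', 'bullet']
```

The third shot reserves a round, fails to spawn, and must send a compensating refund. This is exactly the kind of bookkeeping Tim calls error-prone, and it grows with every additional object that has to take part in the same "transaction".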

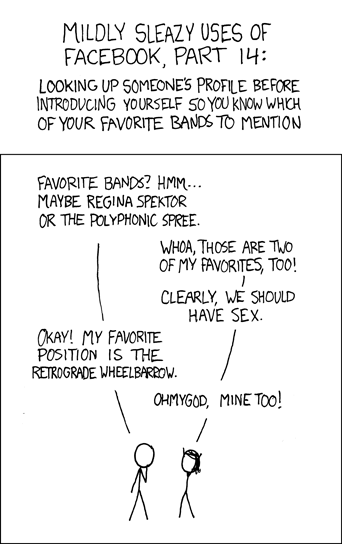

The Funniest Man Alive

posted on 2007-08-08 15:55:24

Seriously, he is. Today:

Additionally, yummy things from reddit.com and the collective planets:

Marc Andreessen on Entrepreneurs and Creativity

A Slashdot Comment that Doesn't Suck. Almost makes me want to start reading slashdot again. Not quite enough somehow.

Good Linux Kernel Mailing List discussions about how disk I/O is handled, plus KernelTrap's coverage.

